Blogs describe countless best practices and case studies in conversion rate optimization (CRO) and A/B testing, but you shouldn’t trust every word they say or implement every growth hack that has worked for others. This article explains why, and how to treat best practices instead.

If you let your company follow the chain “find a suitable case study”, “copy an A/B testing case”, “make a mistake”, you risk losing your business! Testing ideas found on the Internet (or worse, implementing them right away) is a common mistake among beginners in A/B testing.

1. Most Conversion Rate Optimization Case Studies Don’t Show Absolute Numbers

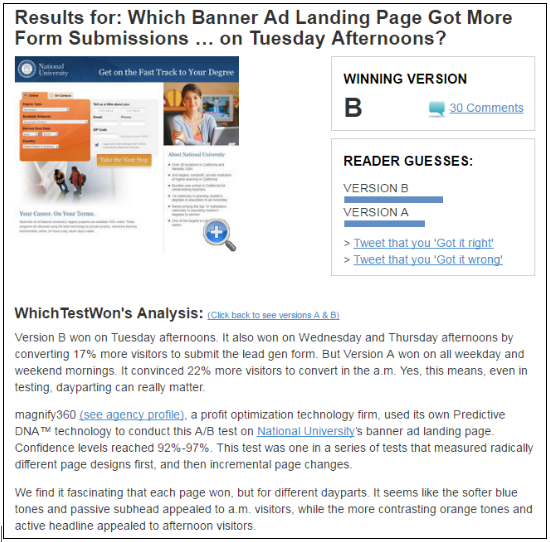

CRO case studies on the web usually report only two metrics: the conversion rate growth (as a percentage) and the level of statistical significance. These relative metrics are not enough to draw well-founded conclusions, so such case studies aren’t trustworthy. You can’t tell whether the results are reliable unless you know the absolute number of conversions behind the reported improvement and the specifics of the users in the study. Case studies rarely include these figures. For example, the Whichtestwon case study on button optimization shown above is not very credible, because it gives no absolute numbers (neither the number of conversions nor the sample size).

Looking at this case study, we found no information about the number of conversions per variation. That is typical of case studies published on the web. To avoid harming your business, don’t apply the results of such experiments to it.
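To see why a relative uplift alone says so little, here is a minimal Python sketch. It assumes a pooled two-proportion z-test (a common choice, not necessarily the method used in any particular case study), and all conversion counts are hypothetical numbers made up for illustration. Both scenarios show the same 20% relative uplift, yet only one of them has enough data behind it.

```python
import math

def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value of a pooled two-proportion z-test."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # erfc(|z| / sqrt(2)) is the two-sided tail probability of a standard normal
    return math.erfc(abs(z) / math.sqrt(2))

# Hypothetical data: both scenarios go from 2.0% to 2.4% (+20% relative uplift).
small = two_proportion_p_value(conv_a=10, n_a=500, conv_b=12, n_b=500)
large = two_proportion_p_value(conv_a=1000, n_a=50_000, conv_b=1200, n_b=50_000)

print(f"10 vs 12 conversions:     p = {small:.3f}")
print(f"1000 vs 1200 conversions: p = {large:.6f}")
```

With a dozen conversions per variation, the “+20%” is indistinguishable from noise. With a thousand or more, the same uplift is much harder to explain by chance. A case study that hides the absolute numbers doesn’t let you tell these two situations apart.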

2. A/B Testing Best Practices Are Often Based on Random Results

Don’t trust conversion rate studies based on random results. To protect yourself from poor-quality information, you need to know the basics of the scientific approach to improving conversion. When you read the next CRO best practice, look for the absolute numbers: the number of conversions per variation and the length of the experiment. To better understand the terminology and specifics of A/B testing as a scientific approach to CRO, be sure to read Peep Laja’s article on statistical significance and validity in testing. Remember that reaching statistical significance is not, by itself, enough reason to stop a test.

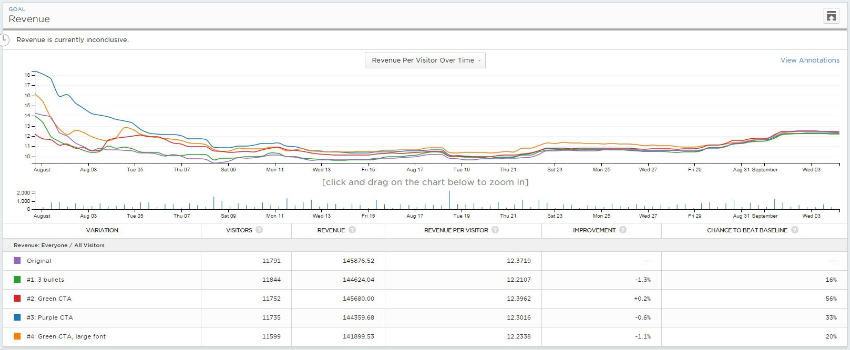

Look at this case study, based on an A/B experiment that Peep Laja ran for one of his e-commerce clients. The test ran for 35 days, targeted desktop only, and lasted until every variation had close to 3000 conversions. (Spoiler: it’s a properly run case study, and there was no winning variation :))

Let’s look at the details:

- After the first 4 or 5 days: the blue curve is the winning variation. A lot of careless marketers stop their experiments there (fail).

- After 7 days: the blue one is still winning, but the relative difference is small.

- After 14 days: the orange one (the curve #4) is leading!

- After 21 days: the 4th curve is still leading!

- End: no difference.
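The pattern above, where an early “winner” fades away, can be reproduced with a small simulation. The sketch below is an illustration under stated assumptions, not a reconstruction of the experiment in the chart: it runs A/A tests (both variations have the same true conversion rate), checks a pooled two-proportion z-test once per day, and compares how often a marketer who peeks daily would see a “significant” result against how often the result is significant on the last day. The traffic, conversion rate, and function names are all hypothetical.

```python
import math
import random

def p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value of a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    if pooled in (0.0, 1.0):
        return 1.0  # no variation in the data, nothing to compare
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / se
    return math.erfc(abs(z) / math.sqrt(2))

def simulate_aa_tests(runs=200, days=35, visitors_per_day=200,
                      rate=0.05, alpha=0.05, seed=1):
    """Return (share of runs significant on ANY day, share significant on the LAST day)."""
    rng = random.Random(seed)
    significant_any_day = 0
    significant_last_day = 0
    for _ in range(runs):
        conv_a = conv_b = n = 0
        seen_significance = False
        for _day in range(days):
            n += visitors_per_day
            # Both variations convert at the same true rate: any "winner" is noise.
            conv_a += sum(rng.random() < rate for _ in range(visitors_per_day))
            conv_b += sum(rng.random() < rate for _ in range(visitors_per_day))
            last_p = p_value(conv_a, n, conv_b, n)
            if last_p < alpha:
                seen_significance = True
        significant_any_day += seen_significance
        significant_last_day += last_p < alpha
    return significant_any_day / runs, significant_last_day / runs

peeking_rate, final_rate = simulate_aa_tests()
print(f"'Winner' seen on at least one day: {peeking_rate:.0%}")
print(f"'Winner' on the final day only:    {final_rate:.0%}")
```

Because the daily check includes the final day, the first number can never be lower than the second, and in practice it tends to be noticeably higher. That gap is the share of false winners you would declare by stopping as soon as the dashboard shows significance.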

So, you should consider only those A/B testing experiments that ran for at least 3 or 4 weeks. Also, disregard A/B testing best practices based on fewer than 100 conversions per variation.
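These two rules of thumb can be written down as a quick screen for any case study you come across. The function below is only a sketch: its name and defaults are illustrative (21 days stands for the lower bound of “3 or 4 weeks”), and the sample inputs are hypothetical.

```python
def passes_basic_screen(days_run, conversions_per_variation,
                        min_days=21, min_conversions=100):
    """Return True if a case study meets the minimum duration and
    the minimum number of conversions in every variation."""
    long_enough = days_run >= min_days
    enough_data = all(c >= min_conversions for c in conversions_per_variation)
    return long_enough and enough_data

# Hypothetical case studies, for illustration only.
print(passes_basic_screen(days_run=35, conversions_per_variation=[2950, 3010]))
print(passes_basic_screen(days_run=5, conversions_per_variation=[40, 52]))
```

Passing this screen doesn’t make a case study right for your website. It only tells you the study is worth a closer look. A case study that doesn’t report these numbers at all can’t be screened, which is a reason for caution in itself.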

3. There Should Be Analysis Behind Any Hypothesis

Finally, remember that every website and business is unique. You need to understand the logical chain behind any A/B testing experiment you read about, so that you know why certain hypotheses were chosen. Most authors don’t describe the research they conducted before the experiment, how they found the problems, or why those problems were important to test.

To decide whether to take a concept presented in a case study and A/B test the same element on your website, you need to know why the author made the calls he or she did.

And, of course, don’t forget that different audiences have different demographic characteristics, preferences, and behavior, so you have to do your own research with your analytics provider and launch your own A/B testing experiment to get really reliable results.

How to Get the Best Out of A/B Testing and Other Conversion Rate Optimization Case Studies?

Think of each A/B testing case study as inspiration for future analysis and A/B testing of your own website. Finding an appealing A/B testing case study is not a signal to copy the design or to start the same A/B testing experiment immediately. If something worked well in a case study you found, treat it as a signal to dig into your analytics, then form your own hypothesis, create your own content variations, and launch your own A/B testing experiment (e.g. using Maxymyzely’s web analytics and A/B/N testing solution).

Be sure to protect your company from blindly applying best practices based on sloppy A/B tests! Even after finding a worthy conversion rate optimization best practice, you should perform the following steps:

- do your own research,

- formulate and test a hypothesis via an A/B/N experiment,

- enjoy improved conversion! 🙂