The web is developing rapidly and the number of potential competitors rises every minute, so any responsible marketer already knows A/B testing as a reliable way to improve user experience and, as a result, conversion rates. But have you heard of another CRO method, the multi-armed bandit?

In today’s article we’ll look at the multi-armed bandit as a method of conversion rate optimization and compare it with simple A/B testing. First, let’s go through some details of each method, and then compare them.

A one-tailed test allows you to determine whether one variation is better or worse than another, but not both. The direction must be chosen before the test.

The limitations of A/B testing

Although A/B testing (sometimes loosely called a ‘one-armed bandit’ algorithm) is a well-known method of negating the impact of personal bias, it has some limitations:

- While the test is running, 50% of users have to see the ‘bad version’ for the whole duration of the test.

- The method involves a human factor: someone has to decide when to stop the test and which version is the best.

- The method requires a lot of samples and a lot of rounds.
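To give a feeling for how many samples "a lot" can be, here is a rough sketch of the textbook sample-size estimate for comparing two conversion rates. The function name `ab_sample_size_per_arm` and the example rates are assumptions made for illustration only; the formula is the common normal approximation, not a description of how any particular tool calculates it.

```python
import math
from statistics import NormalDist


def ab_sample_size_per_arm(base_rate, target_rate, alpha=0.05, power=0.80,
                           two_sided=True):
    """Approximate visitors needed per variation (normal approximation).

    base_rate   -- conversion rate of the current page, e.g. 0.05
    target_rate -- conversion rate you hope the new page reaches, e.g. 0.06
    alpha       -- accepted false-positive rate
    power       -- chance of detecting the difference if it is real
    """
    if base_rate == target_rate:
        raise ValueError("the two rates must differ")
    normal = NormalDist()
    # A two-tailed test splits alpha between both directions.
    z_alpha = normal.inv_cdf(1 - alpha / 2 if two_sided else 1 - alpha)
    z_power = normal.inv_cdf(power)
    variance = base_rate * (1 - base_rate) + target_rate * (1 - target_rate)
    effect = target_rate - base_rate
    return math.ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)


# Hypothetical example: detecting a lift from 5% to 6% conversion.
visitors_per_arm = ab_sample_size_per_arm(0.05, 0.06)
print(visitors_per_arm)
```

Under these assumptions, even a one-point lift needs several thousand visitors per variation, and a one-tailed test (`two_sided=False`) needs somewhat fewer because the direction is fixed in advance.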

“We #&@!ing lost $400,000 because you %@#$ing forgot the A/B test was still %@&#ing running?!?!?!” (C) Anonymous

Bandit Algorithms for Conversion Rate Optimization

A two-tailed test allows you to determine whether two website variations differ from one another. The direction does not have to be specified before the test, so automatic conversion optimization takes into account the possibility of both a positive and a negative effect. In this setting, each testing round consists of an exploration phase and an exploitation phase, and combining the two helps to find the best working balance.

In plain terms, the best-performing page is shown as often as possible, while the worst-performing pages are almost never shown.
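The balance between exploration and exploitation can be sketched with the simplest bandit, epsilon-greedy. This is a minimal illustration, not Maxymizely's production code: the function names, the 10% exploration rate and the conversion rates in the simulation are all assumptions chosen for the example.

```python
import random


def epsilon_greedy_choice(shows, conversions, epsilon=0.1, rng=random):
    """Return the index of the page variation to show to the next visitor."""
    if rng.random() < epsilon:
        # Exploration: pick any variation at random.
        return rng.randrange(len(shows))
    # Exploitation: pick the variation with the best observed conversion rate.
    rates = [c / s if s else 0.0 for c, s in zip(conversions, shows)]
    return max(range(len(rates)), key=lambda i: rates[i])


def simulate(true_rates, visitors=20000, epsilon=0.1, seed=42):
    """Send simulated visitors through the bandit and count what happened."""
    rng = random.Random(seed)
    shows = [0] * len(true_rates)
    conversions = [0] * len(true_rates)
    for _ in range(visitors):
        arm = epsilon_greedy_choice(shows, conversions, epsilon, rng)
        shows[arm] += 1
        if rng.random() < true_rates[arm]:
            conversions[arm] += 1
    return shows, conversions


# Hypothetical pages converting at 4%, 5% and 10%.
shows, conversions = simulate([0.04, 0.05, 0.10])
print(shows, conversions)
```

With these assumed rates you would typically expect the strongest page to collect most of the impressions, although the exact split depends on the random seed.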

Keep in mind that there are different bandit algorithms, and some of them work better than others. Here is a short review of the main multi-armed bandit families.

| Group of methods/strategies | Pros | Cons |
| --- | --- | --- |
| Iterative strategies (dynamic programming, Gittins indices, UCB1) | Provably optimal strategies | Limited horizon (a short sequence of steps), computational complexity that grows as the problem scales, very slow convergence |
| Linear reward-action | Dynamic weight updates, fast convergence | Sensitive to the initial approximation |
| Heuristic methods (ε-greedy, Softmax, Exp3) | Not sensitive to an increase in the scale of the problem | More often lead to sub-optimal solutions |

The multi-armed bandit algorithm principles used in Maxymizely

As we can see, each algorithm has its own pros and cons. To improve the reliability of results, at Maxymizely we combine two methods: epsilon-greedy and linear reward-action. In the first stage, the exploration phase, our algorithm distributes 10% of all traffic equally among the variations. In the exploitation phase, the remaining traffic is distributed so that the most successful variations get most of it. Our system detects changes in the conversion of each variation and adjusts its weight according to its probability to win. We also take into account the speed of weight changes to compensate for errors.

During the first phase we use the retraining principles taken from the ε-greedy algorithm, and during the second phase the algorithm uses the linear reward-action method.
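One way to picture the two phases is as a single traffic split: an equal exploration share plus an exploitation share proportional to each variation's probability to win. The sketch below is only an interpretation of the description above; the function name `traffic_weights` and the exact weighting rule are assumptions, and the real system also reacts to the speed of weight changes, which is left out here.

```python
def traffic_weights(win_probabilities, exploration_share=0.10):
    """Turn per-variation win probabilities into traffic weights.

    exploration_share of the traffic is split equally between variations;
    the rest is split in proportion to each variation's probability to win.
    """
    count = len(win_probabilities)
    if count == 0:
        raise ValueError("at least one variation is required")
    total = sum(win_probabilities)
    if total <= 0:
        # No information yet: fall back to an equal split.
        return [1.0 / count] * count
    exploitation_share = 1.0 - exploration_share
    return [
        exploration_share / count + exploitation_share * p / total
        for p in win_probabilities
    ]


# Hypothetical win probabilities for three variations.
print(traffic_weights([0.7, 0.2, 0.1]))
```

Under this rule a variation with a 100% probability to win receives the whole exploitation share, while the other variations keep only their slice of the exploration traffic.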

The linear reward-action method is based on the principles of PID controllers and consists of two components: the derivative component (D) defines the speed of change in the probability to win, and the proportional-integral component (PI) is the current probability to win in the test. To evaluate the probability of winning we use the Bayesian approach. Handy Bayesian calculators exist that help to visualise the estimated probability that A beats B given the data you have. With a small number of trials the estimate follows a beta distribution; on large samples it is closely approximated by a normal distribution.
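The Bayesian estimate of "the probability that A beats B" can be approximated with a few lines of simulation. The sketch below assumes a uniform Beta(1, 1) prior and Monte Carlo sampling; the function name `probability_a_beats_b` and the visitor counts are made up for the example, and this is not necessarily how Maxymizely computes the value.

```python
import random


def probability_a_beats_b(conv_a, n_a, conv_b, n_b, draws=100_000, seed=0):
    """Estimate P(rate_A > rate_B) from observed conversions and visitors.

    Each conversion rate gets a Beta(1 + conversions, 1 + non-conversions)
    posterior, which assumes a uniform prior before any data is seen.
    """
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        if rate_a > rate_b:
            wins += 1
    return wins / draws


# Hypothetical data: A converts 120 of 1000 visitors, B converts 100 of 1000.
print(probability_a_beats_b(120, 1000, 100, 1000))
```

A result close to 0.5 means the data cannot yet separate the two variations, while values near 0 or 1 indicate a likely loser or winner.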

Our algorithm retrains up to 4 times a day, looking for the solution that maximizes profit. If the algorithm concludes that a variation has a 100% chance of winning, after a while that variation receives the full weight of the 90% exploitation share in order to maximize your profit. So we can safely say that our algorithm isn’t an ordinary multi-armed bandit, it’s a multi-armed bandit on steroids! 🙂

The limitations of Maxymizely’s algorithm

As shown above, our multi-armed bandit generally outperforms plain A/B testing in terms of how quickly conversion increases. The only limitation you may face when launching this method is the amount of traffic: each arm of the multi-armed bandit needs at least 500 unique visitors.

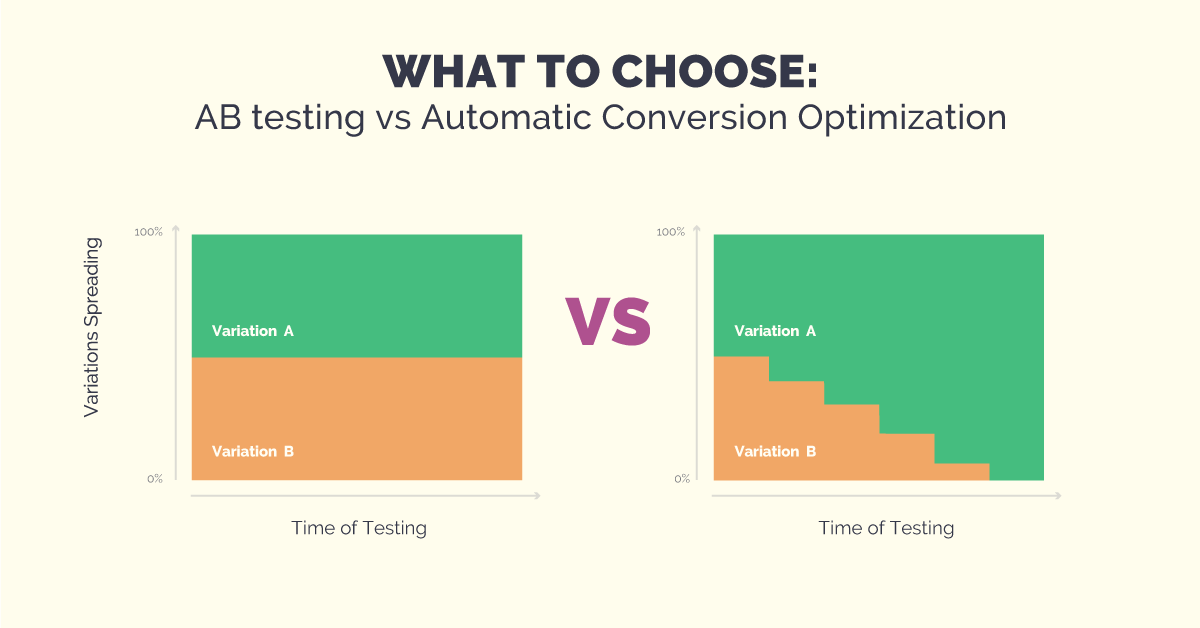

A/B testing vs. automatic conversion optimization

- Minimize losses: A/B tests send equal numbers of visitors to each page, no matter how well it performs. Maxymizely’s bandit algorithm, on the other hand, keeps relearning and sends visitors to the best-performing page. In this sense, the bandit algorithm has a clear advantage over ordinary A/B tests. Bandits also let you distinguish between two versions of a page whose performance is relatively similar.

- Sample size: Multi-armed bandits generally require fewer observations to reach a conclusion with the same level of confidence as an A/B test. The difference lies in how each approach defines the ‘winner’; both statistical approaches are equally reliable.

- Easy to set up: Certain conditions for setting up a bandit algorithm are difficult to implement, which is why this method was less popular before modern machine learning practices appeared. While A/B testing has been used for over a century, bandit algorithms were rarely used, mainly because their calculation time was much longer than that of an ordinary A/B test. Today, however, one iteration of a Bayesian bandit takes less than a second on our computers, which is why this optimization method is regaining popularity. It is easy to set up and available in our system.

If you have any questions about which method (A/B testing or automatic conversion optimisation) is the better choice for your business case, feel free to ask our experts in the comments section below.